Apache Spark

- How To Run Spark Applications On Windows - SaurzCode

- Hadoop - Winutils - Datacadamia

- See Full List On Github.com

- Spark 1.6-Failed To Locate The Winutils Binary In The Hadoop ...

Click the New button to open the Set the VM Argument dialog box, and add an argument that points to the directory containing the bin folder where winutils.exe is located. In this example, the argument is -Dhadoop.home.dir=C:/tmp/winutils. Click OK to validate the change; the new argument then appears in the Argument list.

Running winutils.exe -help prints "Usage: hadoop\bin\winutils.exe command", followed by the description "Provide basic command line utilities for Hadoop on Windows." and a list of the available commands. For example, chmod changes file mode bits; its usage is "chmod OPTION OCTAL-MODE FILE" or "chmod OPTION MODE FILE", which changes the mode of FILE to MODE.

The cdarlint/winutils repository on GitHub provides winutils.exe, hadoop.dll and hdfs.dll binaries for Hadoop on Windows.

Spark is an in-memory cluster computing framework for processing and analyzing large amounts of data.

Steps to Install Spark in Windows.

Winutils is required when installing Hadoop in a Windows environment, and prebuilt Windows binaries are available for several Hadoop versions. According to their maintainer, these binaries are built directly from the same git commit used to create the official ASF releases; they are checked out and built on a Windows VM that is dedicated purely to testing Hadoop/YARN apps on Windows. That VM is not a day-to-day system, so it is isolated from drive-by and email security attacks. A separate build of the Hadoop 3.3.0 winutils, compiled on Windows 10 using CMake and Visual Studio (MSVC x64), is also available.

Step 1: Install Java

You must have Java installed on your system.

If you don't have Java installed, download the appropriate Java version from this link.

Step 2: Downloading Winutils.exe and setting up Hadoop path

- To run Spark on Windows without complications, you need winutils. Download winutils from this link.

- Create a folder named winutils on the C drive. Inside the winutils folder, create another folder named bin and place winutils.exe in it.

- Open the environment variables dialog and click New. Add a variable named HADOOP_HOME whose value is the winutils folder created above (C:\winutils).

- Select the Path environment variable, which is already present in the list, and click Edit. In the Path variable, click New and add %HADOOP_HOME%\bin.
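The folder layout and variable from this step can be checked with a small script. This is a minimal sketch, assuming Python is available on the machine; the function name `check_hadoop_home` is hypothetical and introduced only for illustration.

```python
import os
from pathlib import Path


def check_hadoop_home(environ=os.environ):
    """Return a list of problems found with the HADOOP_HOME setup (empty if none)."""
    problems = []
    home = environ.get("HADOOP_HOME")
    if not home:
        problems.append("HADOOP_HOME is not set")
        return problems
    # HADOOP_HOME should point at the folder that contains bin, not at bin itself.
    winutils = Path(home) / "bin" / "winutils.exe"
    if not winutils.is_file():
        problems.append(f"winutils.exe not found at {winutils}")
    return problems


if __name__ == "__main__":
    issues = check_hadoop_home()
    print("OK" if not issues else "\n".join(issues))
```

Open a new Command Prompt before running it, since environment variable changes are only picked up by newly started processes.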

Step 3: Install Scala.

- If you don't have Scala installed on your system, download and install it from the official site.

Step 4: Downloading Apache Spark and Setting the path.


- Download the latest version of Apache Spark from the official site. At the time of writing, Spark 2.1.1 was the latest version.

- The downloaded file is a .tgz archive (its name ends in .7.tgz), so use a tool such as 7-Zip to extract it.

- After extracting the files, create a new folder on the C drive named Spark (or any name you like) and copy the extracted content into that folder.

- Set the SPARK_HOME environment variable to that folder. This works the same way as setting HADOOP_HOME.

- Edit the Path variable and add %SPARK_HOME%\bin.
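A common mistake at this point is setting the home variables but forgetting the matching Path entries. The sketch below reports which home variables have no bin folder on the Path. It is only an illustration: the function name `missing_bin_entries` is hypothetical, and it uses Python's `ntpath` module so that Windows path rules (case-insensitive, backslash separators) apply on any platform.

```python
import ntpath
import os


def missing_bin_entries(environ, home_vars=("HADOOP_HOME", "SPARK_HOME")):
    """Return the home variables whose bin folder is not listed on PATH."""
    def norm(p):
        # Drop surrounding quotes and trailing separators, then fold case.
        return ntpath.normcase(ntpath.normpath(p.strip().strip('"')))

    path_entries = {norm(p) for p in environ.get("PATH", "").split(";") if p.strip()}
    missing = []
    for var in home_vars:
        home = environ.get(var)
        if not home or norm(ntpath.join(home, "bin")) not in path_entries:
            missing.append(var)
    return missing


if __name__ == "__main__":
    # On Windows, an empty list means both bin folders are on the Path.
    print(missing_bin_entries(os.environ))
```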

That's it! :) Spark installation is done.

Running Spark

To run Spark, open a Command Prompt (CMD), type spark-shell, and press Enter.

If everything is set up correctly, the Spark shell starts without any errors.

To create a Spark project using SBT to work with Eclipse, check this link.

If you run into any errors while installing Spark, please post the problem in the comments and I will try to help.

In this post I will walk through the process of downloading and running Apache Spark on Windows 8 x64 in local mode on a single computer.

Prerequisites

- Java Development Kit (JDK 7 or 8). I installed it at ‘C:\Program Files\Java\jdk1.7.0_67’.

- Scala 2.11.7. I installed it at ‘C:\Program Files (x86)\scala’. This is optional.

- After installation, set the following environment variables:

- JAVA_HOME: the value is the JDK path. In my case it is ‘C:\Program Files\Java\jdk1.7.0_67’. For more details click here. Then append ‘%JAVA_HOME%\bin’ to the PATH environment variable.

- SCALA_HOME: the value is the Scala path. In my case it is ‘C:\Program Files (x86)\scala’. Then append ‘%SCALA_HOME%\bin’ to the PATH environment variable.

Downloading and installing Spark


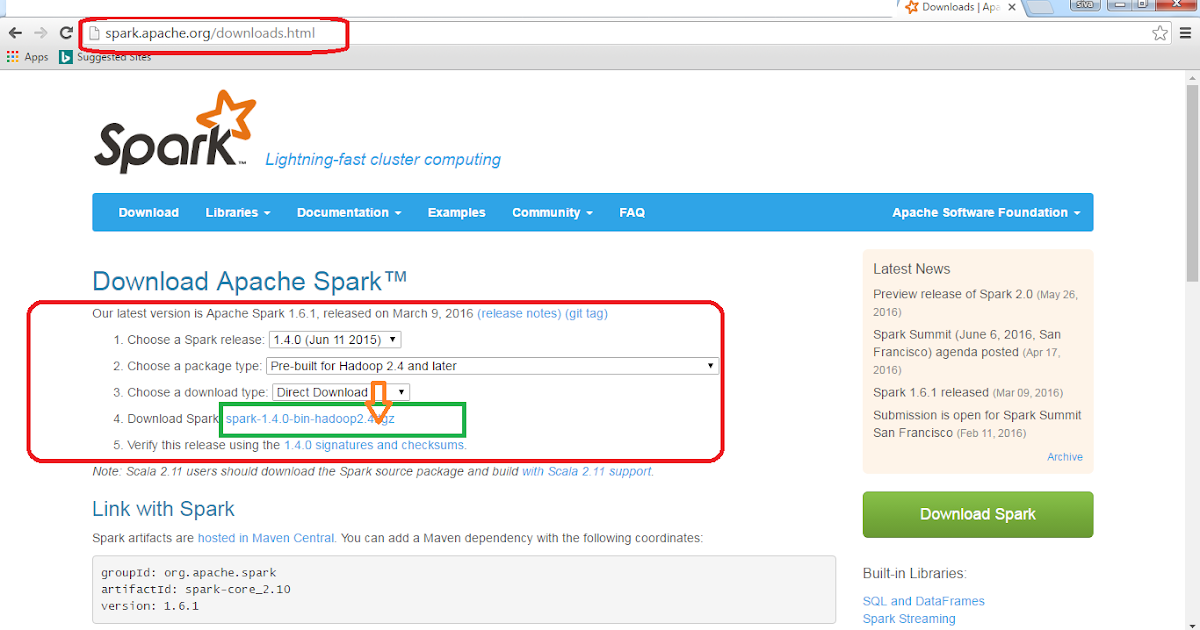

1. Follow the instructions on http://spark.apache.org/docs/latest/ and download Spark 1.6.0 (Jan 04 2016) with the “Pre-built for Hadoop 2.6 and later” package type from http://spark.apache.org/downloads.html

2. Extract the downloaded archive to D:\Spark.

3. Spark has two shells, both located in the bin directory of the extracted folder (shown here as ‘C:\Spark\bin’):

a. Scala shell (C:\Spark\bin\spark-shell.cmd).

b. Python shell (C:\Spark\bin\pyspark.cmd).

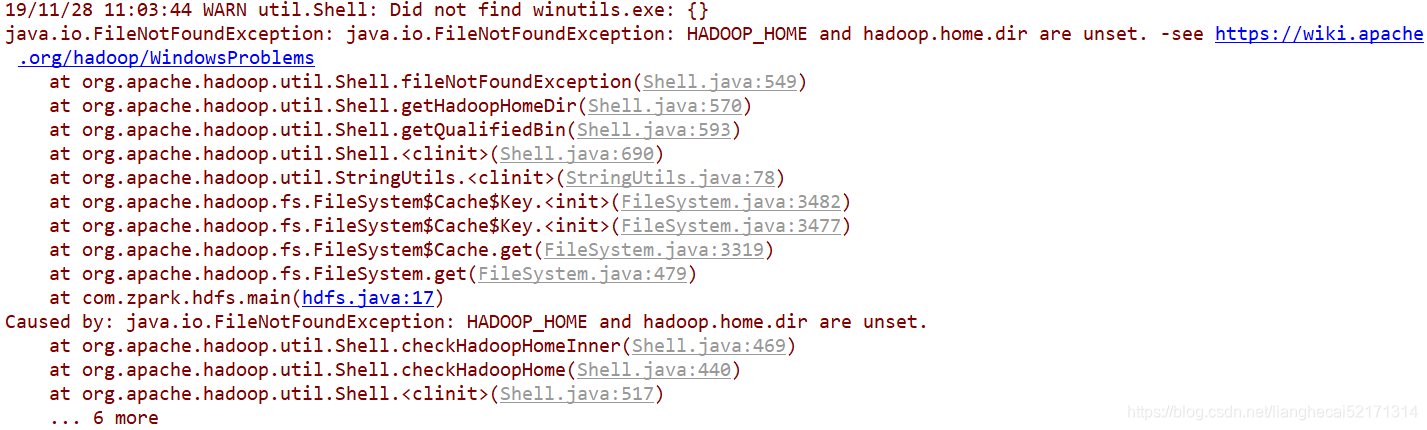

4. Run either one of them, and you will see the following exception:

java.io.IOException: Could not locate executable null\bin\winutils.exe in the Hadoop binaries.

This issue is often caused by a missing winutils.exe file, which Spark needs in order to initialize the Hive context. The Hive context in turn depends on Hadoop, which requires native libraries on Windows to work properly. Unfortunately, this happens even if you are using Spark in local mode without using any of the HDFS features directly.
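The "null" at the start of the path in the exception is a useful clue: it suggests that the Hadoop home directory was never resolved before the path to winutils.exe was built. The following is a simplified Python model of that lookup, not Hadoop's actual code. It assumes the home directory is read from the hadoop.home.dir system property first and from the HADOOP_HOME environment variable second; the function name `winutils_path` is hypothetical.

```python
def winutils_path(system_props, environ):
    """Model how a missing Hadoop home yields 'null\\bin\\winutils.exe'."""
    home = system_props.get("hadoop.home.dir") or environ.get("HADOOP_HOME")
    # In Java, concatenating a null reference into a string yields the text "null".
    home_text = "null" if home is None else home
    return home_text + "\\bin\\winutils.exe"


# Neither setting is present: the path starts with "null", as in the exception.
print(winutils_path({}, {}))  # null\bin\winutils.exe

# With HADOOP_HOME set, the path points at a real location.
print(winutils_path({}, {"HADOOP_HOME": "D:\\hadoop"}))  # D:\hadoop\bin\winutils.exe
```

Under this model, setting either value is enough to remove the "null" prefix, which is why the fix is to provide winutils.exe and point HADOOP_HOME at its parent folder.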

To resolve this problem, you need to:

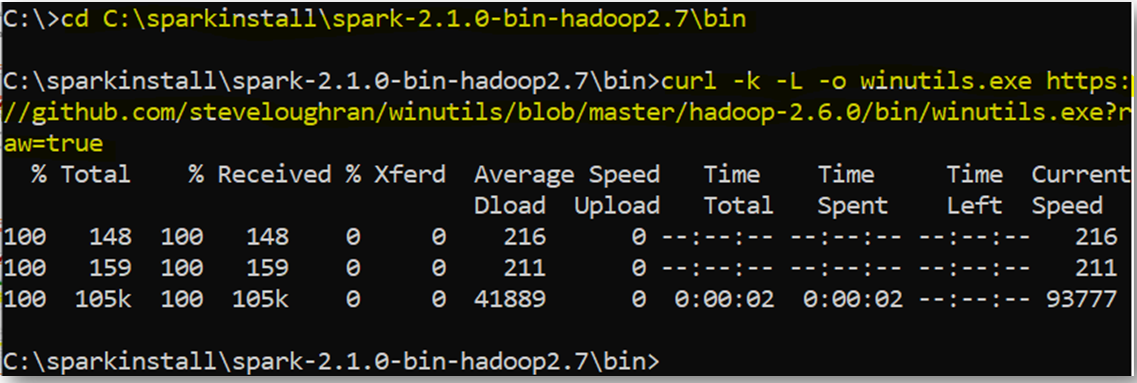

a. download the 64-bit winutils.exe (106KB)

- Direct download link https://github.com/steveloughran/winutils/raw/master/hadoop-2.6.0/bin/winutils.exe

- NOTE: there is a different winutils.exe file for the 32-bit Windows and it will not work on the 64-bit OS

b. copy the downloaded file winutils.exe into a folder like D:\hadoop\bin (or D:\spark\hadoop\bin)

c. set the environment variable HADOOP_HOME to point to the above directory but without bin. For example:


- if you copied winutils.exe to D:\hadoop\bin, set HADOOP_HOME=D:\hadoop

- if you copied winutils.exe to D:\spark\hadoop\bin, set HADOOP_HOME=D:\spark\hadoop

d. Double-check that the environment variable HADOOP_HOME is set properly by opening the Command Prompt and running echo %HADOOP_HOME%

e. You will also notice that when starting spark-shell.cmd, Hive creates a C:\tmp\hive folder. If you receive any errors related to the permissions of this folder, use the following commands to set the permissions on it:

- List current permissions: %HADOOP_HOME%\bin\winutils.exe ls \tmp\hive

- Set permissions: %HADOOP_HOME%\bin\winutils.exe chmod 777 \tmp\hive

- List updated permissions: %HADOOP_HOME%\bin\winutils.exe ls \tmp\hive

5. Re-run spark-shell; it should now work as expected.
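The three permission commands from step 4e can also be scripted. This is a minimal sketch, assuming Python is installed and HADOOP_HOME is already set; the function name `winutils_commands` is hypothetical, and the commands are only executed when the script runs on Windows.

```python
import ntpath
import os
import subprocess
import sys


def winutils_commands(hadoop_home, folder="\\tmp\\hive", mode="777"):
    """Build the three winutils.exe invocations: list, chmod, list again."""
    exe = ntpath.join(hadoop_home, "bin", "winutils.exe")
    return [
        [exe, "ls", folder],
        [exe, "chmod", mode, folder],
        [exe, "ls", folder],
    ]


if __name__ == "__main__":
    home = os.environ.get("HADOOP_HOME")
    if sys.platform == "win32" and home:
        for cmd in winutils_commands(home):
            # check=True stops at the first command that fails.
            subprocess.run(cmd, check=True)
```

Passing each command as a list avoids quoting problems if HADOOP_HOME contains spaces.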

Text search sample


Hope that helps!